You might say "I think, therefore I am," but do you really know what you mean when you say "I"?

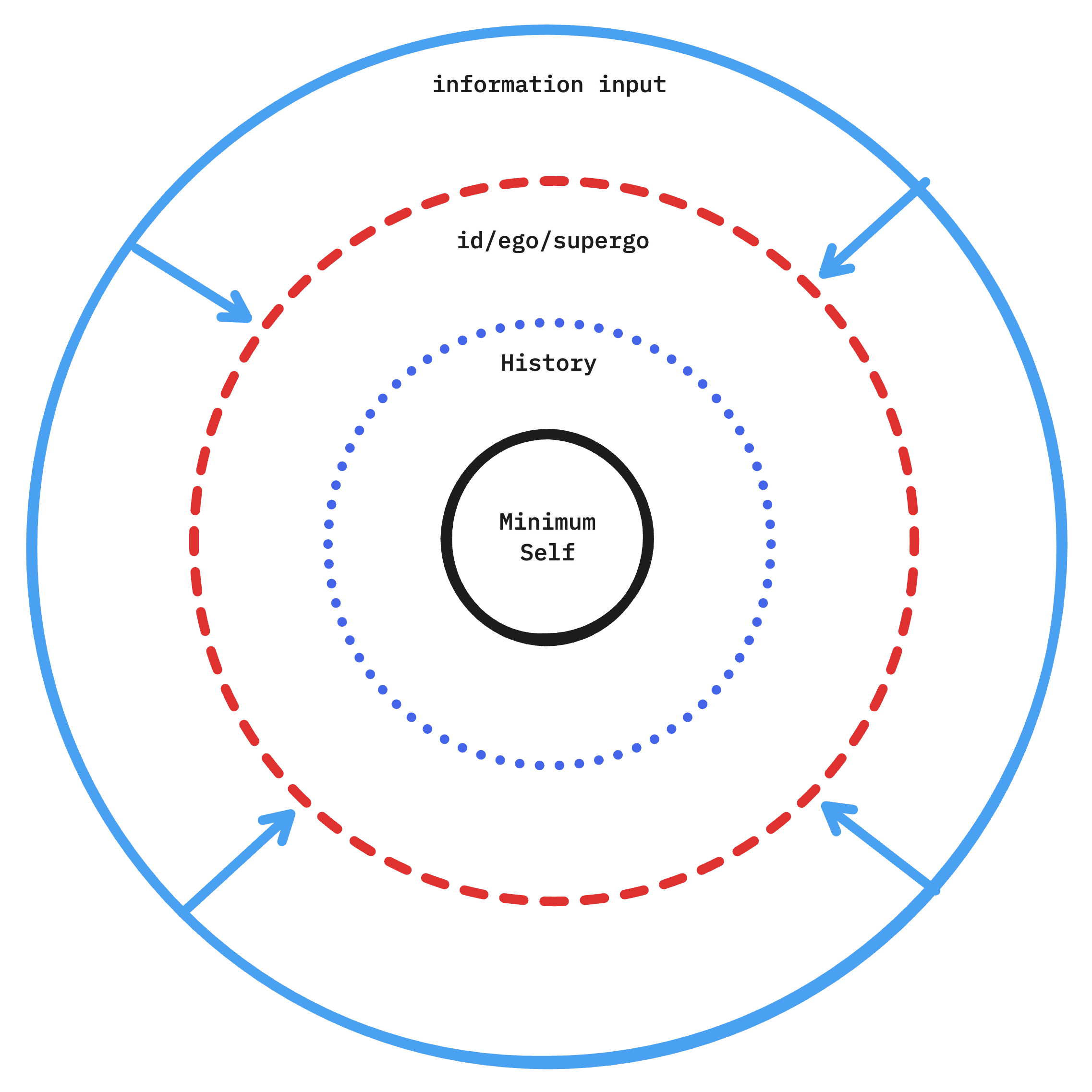

It seems like I'm real because "I think." But before I think, I observe. I observe a phenomenon: some set of information. My lowest-level Self, the minimum Self, seems to be just something that observes phenomena, independent of any sensory input. If I lost my sight, taste, hearing, touch, and smell, I feel like I would still be there (in a void); I wouldn't just disappear. Maybe I would when I die?

The minimum Self seems to be a pure phenomenon-observing consciousness. Let's define history as the set of a minimum Self's past observations of phenomena. I believe history is what separates you from me—everyone's life experiences are different—making every person different. But if you compared your minimum Self to mine, we would be the same person. It seems like every conscious being—or at least humans from what I currently understand—has the same exact minimum Self. So when you interact with others, you're just interacting with yourself—the foundational layer of consciousness in the universe. Your history just blankets your minimum Self.

You can stack the id, ego, and superego above the minimum Self and history (hardware memory). These higher-level emergent agents seem to be in a perpetual power struggle with one another, similar to a three-species biological system. The agents (high-level software) take in sensory input from the body (hardware), and the weighted sum of their power struggle manifests as the decisions (actions) that our hardware performs. There is constant Bayesian updating going on as our internal state (software + hardware) interacts with exterior systems (new information). Some of the resulting phenomena, like mimetic desire, are visible when we interact with other humans in social systems. They are also visible in our closest relatives, and will probably turn out to hold for every other living being as we understand them better. The proposition that your minimum Self and an ape's minimum Self are identical may not be so far-fetched. Even you and a flatworm, the simplest life-form with a central nervous system, may not be out of the question.

I thought about this last December, but the new model that I've worked out from first principles in conversations with Allan this summer is a higher-resolution view of the Self. (Spend some time descending deeply, all the way down to your minimum Self. It's easiest with sensory deprivation in a pitch-dark, quiet room.) We didn't program our software or design our hardware. None of our decisions are truly made by us; rather, they are made by higher-level agents responding to our internal and external conditions. We're just observing phenomena. The only thing I can tell you to do is to be.