There's a lot more clarity on what to build at Verdus now.

Every farmer we spoke to said labor was the ultimate problem. We'll solve it. Our team can definitely get there... with infinite capital (and someone who actually knows ML). But how do we get there as a team with no money and no leverage to raise venture capital? Time-rich, capital-poor. At least we're not time-poor and capital-rich.

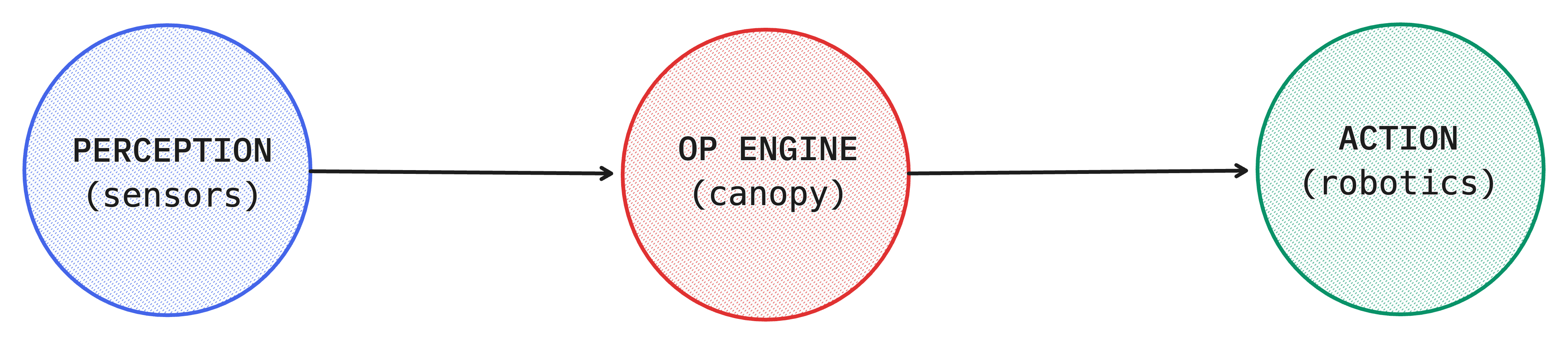

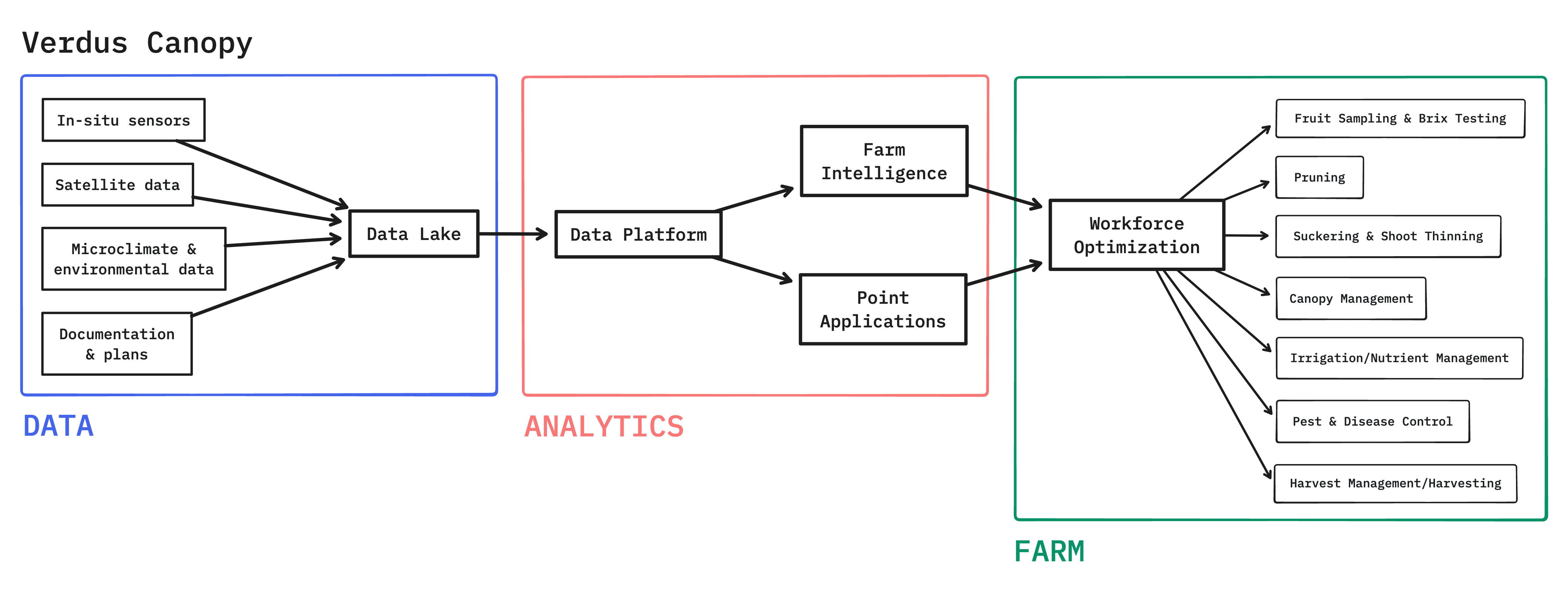

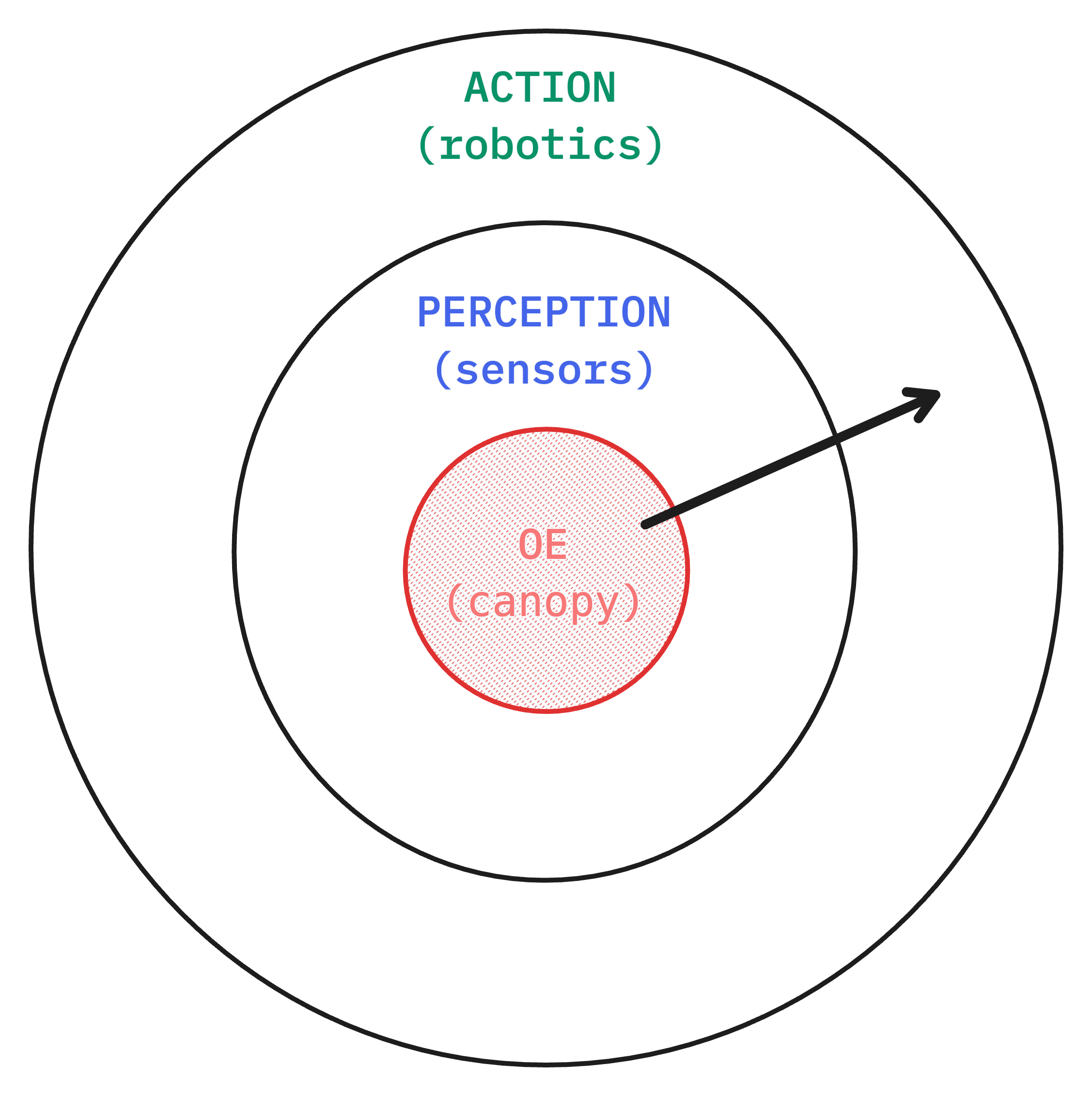

Before, we were trying to solve the perception problem with drones: drones that fly through vine rows and capture extremely high-resolution lateral imagery. That imagery would let us catch mildews, viruses, and pests and, coupled with microclimate and in-situ sensor data, pick up signals of declining plant health. Since sensors can perceive beyond the visible spectrum, it's possible to catch problems before they're visible to humans. We viewed the operating engine (data -> models -> labor) as an ancillary component. Given our updated information set, it's now the best way forward.

California vineyards have a variety of sensor inputs today, from remote-sensing NDVI and soil moisture at block/field resolution to ATV-mounted imagery. But the data isn't that useful on its own: farmers still look at several dashboards, make their own assessments, and then deploy their workforce. No operating system exists that can take a ton of messy, unstructured data in different formats and direct real farm labor in natural language. It's basically Palantir Foundry, but specifically for farms. We're reinventing the wheel to some degree, but (1) farms aren't using Foundry, (2) we want a fully native platform, and (3) there's money.
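To make the "messy inputs in, labor out" idea a little more concrete, here's a minimal Python sketch under a lot of assumptions. Everything in it is hypothetical: the names `BlockState`, `fuse`, and `work_orders`; the input shapes (NDVI keyed by block, soil moisture keyed by field, ATV imagery already reduced to `(block, issue)` detections); and the `LOW_NDVI` / `LOW_SOIL_MOISTURE` thresholds, which are illustrative, not agronomic. It only shows the shape of the pipeline: project inputs of different resolutions onto one per-block record, then turn that record into plain-language tasks. Templated strings stand in for the natural-language layer here.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Hypothetical thresholds, for illustration only (not agronomic advice).
LOW_NDVI = 0.5
LOW_SOIL_MOISTURE = 0.15  # assumed volumetric water content, m^3/m^3


@dataclass
class BlockState:
    """One normalized record per vineyard block, fused from every source."""
    block_id: str
    ndvi: Optional[float] = None
    soil_moisture: Optional[float] = None
    detections: List[str] = field(default_factory=list)


def fuse(
    ndvi_by_block: Dict[str, float],
    moisture_by_field: Dict[str, float],
    field_of_block: Dict[str, str],
    atv_detections: List[Tuple[str, str]],
) -> Dict[str, BlockState]:
    """Project inputs with different formats and resolutions onto blocks."""
    blocks = {b: BlockState(b) for b in field_of_block}
    # Block-level NDVI maps directly; readings for unknown blocks are ignored.
    for block_id, value in ndvi_by_block.items():
        if block_id in blocks:
            blocks[block_id].ndvi = value
    # Field-level soil moisture is copied down to every block in that field.
    for block_id, field_id in field_of_block.items():
        blocks[block_id].soil_moisture = moisture_by_field.get(field_id)
    # Imagery detections are attached to the block they were seen in.
    for block_id, issue in atv_detections:
        if block_id in blocks:
            blocks[block_id].detections.append(issue)
    return blocks


def work_orders(blocks: Dict[str, BlockState]) -> List[str]:
    """Turn fused block state into plain-language tasks for the crew."""
    orders = []
    for b in sorted(blocks.values(), key=lambda s: s.block_id):
        for issue in b.detections:
            orders.append(f"Block {b.block_id}: scout for {issue} (flagged by ATV imagery).")
        if b.ndvi is not None and b.ndvi < LOW_NDVI:
            orders.append(f"Block {b.block_id}: walk the rows, NDVI is low ({b.ndvi:.2f}).")
        if b.soil_moisture is not None and b.soil_moisture < LOW_SOIL_MOISTURE:
            orders.append(f"Block {b.block_id}: check irrigation, soil moisture is {b.soil_moisture:.2f}.")
    return orders


if __name__ == "__main__":
    blocks = fuse(
        ndvi_by_block={"A1": 0.72, "A2": 0.41},
        moisture_by_field={"north": 0.12},
        field_of_block={"A1": "north", "A2": "north"},
        atv_detections=[("A1", "powdery mildew")],
    )
    for order in work_orders(blocks):
        print(order)
```

With the sample inputs, block A1 gets a mildew scouting task and an irrigation check, and A2 gets a low-NDVI walk and an irrigation check. The hard part a real OE would have to solve is everything this sketch assumes away: messy formats, conflicting sources, and deciding what actually deserves a crew's time.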

With farms using our operating engine, vertically integrating the perception stack would be much easier. It'd be kind of like building the Apple ecosystem, but starting with macOS. We'd move into visual sensing first, since it would add the largest step-function jump in value, and later into microclimate/environmental sensors.

The grand challenge is full action autonomy: a robot that can thin, do vertical shoot positioning, prune, harvest, and even graft. I have no idea what the robot would look like; it could be anything from drones with mutable arms to a humanoid. It would be impossible to achieve that without perception and the OE working together. At that point, the perception-OE-action ecosystem would be impossible for farmers to leave. Or maybe we wouldn't need farmers at that point. Hopefully not on Mars. Definitely not beyond the Oort Cloud.

Another cool thing on the OE side is highly accurate digital twins. Agricultural data moats exist right now because you can't collect a season's worth of data in a short period of time, the way you can with pretraining data for foundation LLMs. But if you could run a million years of simulation in a day, you'd know what most years will look like given a set of environmental conditions. You could also use reinforcement learning to train drones to fly through rows and record the most useful data (rather than classically telling them to avoid obstacles). Accuracy will be a major pain point, and Gaussian splatting might not be good enough. In the long run, we might need to build a computational plant model at the cellular level to grow virtual plants from conception. Then we can make virtual forests. Then add virtual humans to them. Probably low marginal returns at that point. But we might be omniscient.